Tonight, I attended Leslie Odom Jr.'s stop in Naperville to discuss his book, Failing Up: How to Take Risks, Aim Higher, and Never Stop Learning, based on the mentoring work and speeches he's given at schools. He started the evening with a reading from his book, then had a conversation with Greta Johnsen of the Nerdette Podcast, a question-and-answer session with fans, and finally, a mini concert. When he sang "Without You" from Rent, I seriously got goosebumps. He followed that with "Wait For It" from Hamilton, and after much insistence from the audience, gave us an encore. He began by singing a gorgeous a cappella rendition of the opening verses of Bob Dylan's "Forever Young." When the guitar came in playing the opening strains of "Dear Theodosia" from Hamilton, I cried. It was exactly what I needed right then; it felt like a gift from the universe. And I cried all the way to the end and part of the way home. (It's been a rough week.)

I now own a signed copy of Failing Up, and since I had an extra ticket, I also have an extra signed copy, which I'll be donating to my choir for the silent auction at our upcoming benefit.

Saturday, March 31, 2018

Thursday, March 29, 2018

On to Round 2!

As I did last year, I participated in the NYC Midnight Short Story Challenge. And like last year, I've advanced to round 2 of the competition. My short story - my first attempt at historical fiction - came in 3rd in my heat:

Round 2 assignments are released tonight, and I'll have the weekend to write a 2,000-word story. I'm also still preparing for Blogging A to Z, which starts Sunday. A, B, C, H, and I plus a Statistics Sunday post are already written and scheduled, and I'll be putting together more in the next couple of days. Basically, it looks like I'll have a writing-filled weekend.

Also in April, I'm hoping my 500-word submission will show up in the WRiTE CLUB bouts. I sent in my submission along with my pen name a couple of weeks ago. I learned yesterday that I won't receive any notification of whether my submission will appear - I have to check every day during the contest to see if I appear in a faceoff, which is a great way to encourage us to participate and vote. I also can't share whether my submission appears, since I can't drive votes for my own submission (doing so would get me disqualified). But you can track the contest along with me. I'll share the direct link to the contest once it's released. If I advance, I'll have the opportunity to submit another 500 words later on.

Keep an eye out for some changes to Deeply Trivial in the next few days!

Sunday, March 25, 2018

Statistics Sunday: Countdown to Blogging A to Z

April 1st is one week away and Blogging A to Z will officially begin! (No fooling!)

I'll be blogging my way through the alphabet of R. Here are some past posts that might be useful to help prepare to analyze along with me - if you're so inclined:

- My favorite R packages will make many appearances next month

- The concept of correlation will come up more than once, and you'll want to understand different types of relationships

- I'll be demonstrating various regression tricks in R, but you can find basics on conducting a linear regression in this post, and this one will give some additional code for examining residuals

- Look for more information on factor analysis and how to interpret output and fit statistics

- Since my expertise is in psychometrics, look for more posts on that, including the concept of reliability (and the different types)

- Finally, I'll show you tools for computing effect sizes and the basics of conducting meta-analysis with R
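Since reliability will come up a lot, here's a quick preview of one of its most common types, internal consistency, measured with Cronbach's alpha. This is a minimal Python sketch, not the R code I'll be using next month; the function name and the tiny dataset are made up for illustration. It applies the standard formula, alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances are used for both terms, so the (n - 1) factors cancel.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # regroup scores: one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(person) for person in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical data: 5 respondents answering a 3-item scale rated 1-5
scores = [
    [4, 5, 4],
    [2, 2, 3],
    [3, 3, 3],
    [5, 4, 5],
    [1, 2, 1],
]
print(round(cronbach_alpha(scores), 3))  # 0.945
```

With only five respondents this number means very little; treat it as a demonstration of the arithmetic, not as evidence that a scale is reliable.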

That's all for now!

Friday, March 23, 2018

Psychology for Writers: Your Happiness Set-Point

A few years ago, a former colleague was chatting with someone about the work she did with Veterans who had experienced a spinal cord injury. The person she was chatting with talked about how miserable she would be if that happened to her, even implying that she'd rather be dead than have a spinal cord injury. Many of us who have worked with people experiencing trauma probably have had similar conversations. People believe they would never be able to be happy again if they experienced a life-changing event like a traumatic injury.

On the other hand, we've also heard people talk about how unbelievably happy they would be if they won the lottery or came into a great deal of money in some way.

But you might be surprised to know that researchers have been able to collect data from people both before and after these types of events - simply because they've recruited a large number of people for a study and some people in the sample happened to experience one of these life-changing events. And you might be even more surprised to know that people were generally wrong about how they would feel after these events. People who had experienced a traumatic injury had a dip in happiness but returned to approximately the same place they were before. And people who won the lottery had a brief lift in happiness followed also by a return to baseline.

These findings offer support for what is known as the set-point theory of happiness. According to this theory, people have a happiness baseline and while events may move them up or down in terms of happiness, they'll eventually return to baseline. Situationally influenced emotions are, for the most part, temporary. You might be sad about that injury, or breakup, or financial problem, or you might be elated about that promotion, or lottery win, or new relationship for a little while, but eventually, you revert to your usual level of happiness. Everyone has their own level. Some people are happier on average than others.

This theory also explains why people who are prone to depression need to seek some kind of treatment, often in the form of therapy and medication - interventions to increase your baseline level of happiness. Money or love or new opportunities may help in the short run, but what needs to be targeted is your baseline itself. Of course, events can also shift your baseline - a person may have an experience that changes their way of looking at the world, for better or worse. But events that only affect your mood and don't affect your thought process or reaction are unlikely to have any lasting effects.

This is important to keep in mind when writing your characters. Situations and events can obviously push their current mood around. But for a change to be permanent, it has to do more than simply make the person happy or sad - it has to change their mindset. A divorce might make a person sad. A divorce that changes how secure a person feels in relationships or leads them to distrust others might result in a permanent change. A lottery win might make a person happy. A lottery win that helps them become financially independent, get out of a bad situation, and completely change their way of life might result in a permanent change.

Think about how your character is changing and why, to make sure it's a believable permanent change and not just a temporary happiness shift. And if it's just temporary, know that it's completely believable for your character to work back to baseline on his or her own.

Thursday, March 22, 2018

Science Fiction Meets Science Fact Meets Legal Standards

Any fan of science fiction is probably familiar with the Three Laws of Robotics developed by prolific science fiction author Isaac Asimov:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Here’s a curious question: Imagine it is the year 2023 and self-driving cars are finally navigating our city streets. For the first time one of them has hit and killed a pedestrian, with huge media coverage. A high-profile lawsuit is likely, but what laws should apply?

Here's the problem with those three laws: in order to follow them, the AI must recognize someone as human and be able to differentiate between human and not human. In the article, they discuss a case in which a robot killed a man in a factory because he was in the way. As far as the AI was concerned, something was in the way and kept it from doing its job, so it removed that barrier. It didn't know that barrier was human, because it wasn't programmed to recognize that. So it isn't as easy as putting a three-laws straitjacket on our AI.

At the heart of this debate is whether an AI system could be held criminally liable for its actions.

[Gabriel] Hallevy [at Ono Academic College in Israel] explores three scenarios that could apply to AI systems.

The first, known as perpetrator via another, applies when an offense has been committed by a mentally deficient person or animal, who is therefore deemed to be innocent. But anybody who has instructed the mentally deficient person or animal can be held criminally liable. For example, a dog owner who instructed the animal to attack another individual.

The second scenario, known as natural probable consequence, occurs when the ordinary actions of an AI system might be used inappropriately to perform a criminal act. The key question here is whether the programmer of the machine knew that this outcome was a probable consequence of its use.

The third scenario is direct liability, and this requires both an action and an intent. An action is straightforward to prove if the AI system takes an action that results in a criminal act or fails to take an action when there is a duty to act.

Then there is the issue of defense. If an AI system can be criminally liable, what defense might it use? Could a program that is malfunctioning claim a defense similar to the human defense of insanity? Could an AI infected by an electronic virus claim defenses similar to coercion or intoxication?

Finally, there is the issue of punishment. Who or what would be punished for an offense for which an AI system was directly liable, and what form would this punishment take? For the moment, there are no answers to these questions.

But criminal liability may not apply, in which case the matter would have to be settled with civil law. Then a crucial question will be whether an AI system is a service or a product. If it is a product, then product design legislation would apply based on a warranty, for example. If it is a service, then the tort of negligence applies.

Wednesday, March 21, 2018

Statistical Sins: The Myth of Widespread Division

Recently, many people, including myself, have commented on how divided things have become, especially for any topic that is even tangentially political. In fact, I briefly deactivated my Facebook account, and have been spending much less time on Facebook, because of the conflicts I was witnessing among friends and acquaintances. But a recent study of community interactions on Reddit suggests that only a small number of people are responsible for conflicts and attacks:

User-defined communities are an essential component of many web platforms, where users express their ideas, opinions, and share information. However, despite their positive benefits, online communities also have the potential to be breeding grounds for conflict and anti-social behavior.

Here we used 40 months of Reddit comments and posts (from January 2014 to April 2017) to examine cases of intercommunity conflict ('wars' or 'raids'), where members of one Reddit community, called "subreddit", collectively mobilize to participate in or attack another community.

We discovered these conflict events by searching for cases where one community posted a hyperlink to another community, focusing on cases where these hyperlinks were associated with negative sentiment (e.g., "come look at all the idiots in community X") and led to increased antisocial activity in the target community. We analyzed a total of 137,113 cross-links between 36,000 communities.

A small number of communities initiate most conflicts, with 1% of communities initiating 74% of all conflicts. The image above shows a 2-dimensional map of the various Reddit communities. The red nodes/communities in this map initiate a large amount of conflict, and we can see that these conflict initiating nodes are rare and clustered together in certain social regions. These communities attack other communities that are similar in topic but different in point of view.

Conflicts are initiated by active community members but are carried out by less active users. It is usually highly active users that post hyperlinks to target communities, but it is more peripheral users who actually follow these links and participate in conflicts.

Conflicts are marked by the formation of "echo-chambers", where users in the discussion thread primarily interact with other members of their own community (i.e., "attackers" interact with "attackers" and "defenders" with "defenders").

So even though the conflict may appear to be a widespread problem, it really isn't, at least not on Reddit. Instead, it's only a handful of users (trolls) and communities. Here's the map they reference in their summary:

The researchers will be presenting their results at a conference next month. And they also make all of their code and data available.
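To see what a statistic like "1% of communities initiate 74% of all conflicts" is measuring, here's a minimal Python sketch of that kind of concentration calculation. It is not the researchers' code: it assumes you already have one record per conflict event labeled with the community that started it, and the function name and toy data are hypothetical. Communities that never start a conflict still count toward the total number of communities, which is what makes the top 1% such a small group.

```python
from collections import Counter

def top_share(initiators, n_communities, top_percent):
    """Fraction of conflict events started by the top_percent most conflict-prone communities.

    initiators: one entry per conflict event, naming the community that started it.
    n_communities: total communities studied, including ones that never initiate.
    """
    # Conflicts per initiating community, most active first
    counts = sorted(Counter(initiators).values(), reverse=True)
    # Size of the top group, rounded up so at least one community is included
    n_top = max(1, (top_percent * n_communities + 99) // 100)
    return sum(counts[:n_top]) / len(initiators)

# Hypothetical toy data: 100 communities, 100 conflict events.
# One community starts 74 conflicts, 26 others start one each, 73 start none.
events = ["community_A"] * 74 + [f"community_{i}" for i in range(26)]
print(top_share(events, n_communities=100, top_percent=1))  # 0.74
```

Integer arithmetic is used for the group size so that floating-point rounding can't accidentally bump "1% of 100" up to two communities.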

Monday, March 19, 2018

A Very Timely Hamildrop

Just a few days ago, children across the country walked out of their schools to protest gun violence. On March 24, the March for Our Lives will take place in Washington D.C. and other communities, for a similar purpose. And today, Lin-Manuel Miranda and Ben Platt released the newest Hamildrop, Found Tonight, the proceeds of which will go to benefit March for Our Lives:

It's amazing how many issues currently being debated in this country can be found in Hamilton: immigration and the contribution of immigrants, and now gun violence and the death of our children. While the situation in which Alexander Hamilton lost his son Philip is different from what is happening in our country now, a parallel can still be made. Perhaps that is why Hamilton has resonated with so many people.

Sunday, March 18, 2018

Statistics Sunday: Blogging A-to-Z Theme Reveal

Tomorrow is the official day participants in the 2018 Blogging A-to-Z Challenge have been asked to announce their theme, but since my theme is statistically oriented and it's only one day, I'm doing it today! This year's theme will be...

I'll be blogging my way through my favorite statistical language/software package. Here's a preview of some of the topics I'll be covering:

- D is for Data Frames - how to create and work with one (April 4)

- I is for the ITEMAN package - an R library for classical item analysis (April 10)

- M is for R Markdown Files - how to create PDFs and webpages (HTML) containing your R code and output plus narrative writeup using R Markdown tools in R Studio (April 14)

- S is for the semPlot package - one of my favorite R packages and winner of the "where have you been all my statistical life?" award (April 21)

For each post, I'll let you know what tools (packages, resources) you'll need. You'll definitely want to install R and R Studio. And this post will help you get started if you're completely new to R. To help keep my blog nice and organized, and aid in finding previous posts, I've also added some new post tags:

- R statistical package - posts about R or that reference the R language/package

- R code - posts that contain R code and/or explain how to code something in R; obviously, every post containing R code will also be tagged as R statistical package, but the reverse is not true

- Psychometrics - posts about psychometrics; many of my posts from this month will deal with using R for psychometric analysis

New tags will likely be added throughout the month, but these are tags I've frankly needed for a while.

One quick note (or disclaimer rather) that I probably should have posted long ago, but I think it's important this month as I begin recommending software, websites, and books on the topic: I write for and maintain this blog as a hobby. I don't make any money from my posts, nor do I receive any benefits for recommending something. So if I recommend a book, a piece of software, etc., it's because I genuinely think that thing is worthy of recommendation, not because it benefits me financially or otherwise. If at any point in the future that changes, I'll be sure to make that especially clear. I also don't currently show ads on the blog, and I have no plans to. I know other bloggers do to make a bit of money, but ads annoy me and I imagine they annoy you too! (I'm using Blogger as free hosting, though, so it's possible they may begin showing ads. If they do, I imagine I'll finally pony up and pay for hosting somewhere.)

Good news - Statistics Sunday posts will continue, on topics related to my Blogging A-to-Z posts, since the alphabet schedule generally doesn't include Sundays. This year, because of the way the calendar falls, one BATZ post will be on a Sunday, but other years, they usually give us all Sundays off. Because of that schedule, there will be one day with two statistical posts: the first day of the challenge. Statistical Sins will be on hold for the month.

Friday, March 16, 2018

Psychology for Writers: Knowledge and Perceived Competence

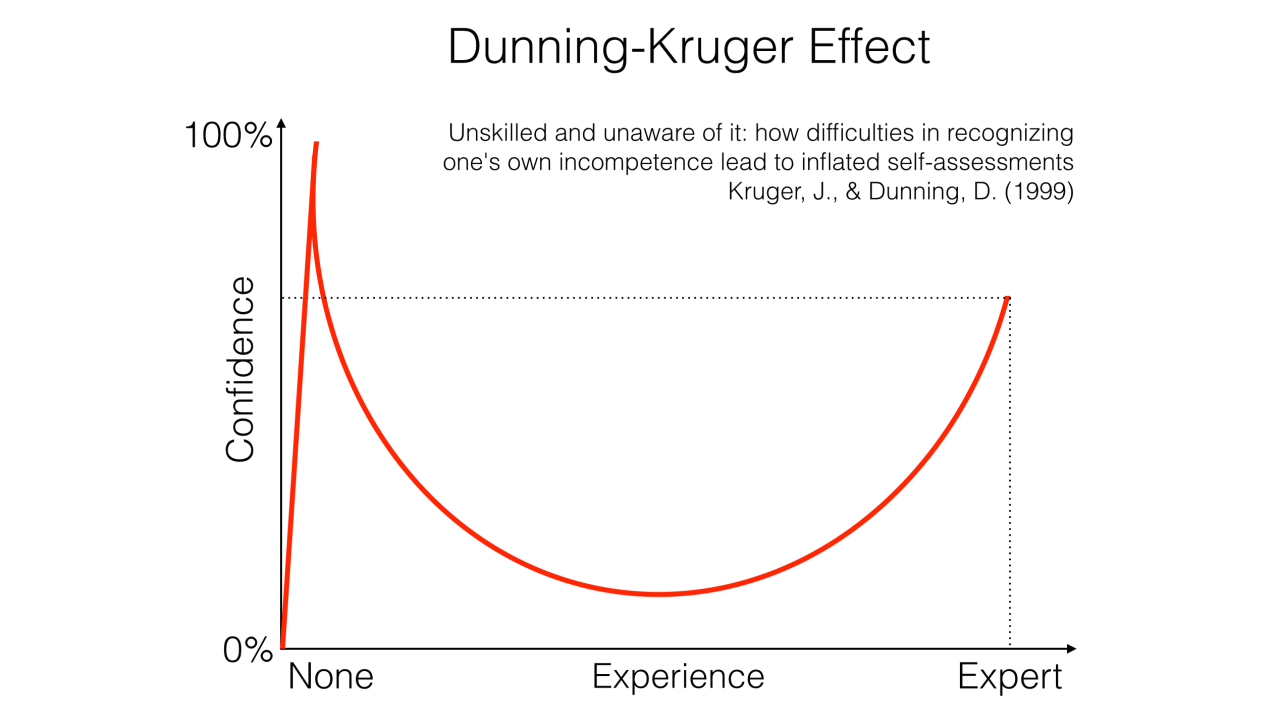

Every once in a while, a psychology theory comes along that is so good, I share it with pretty much everyone, not just fellow psychologists or psychology-oriented friends. A great example is the Dunning Kruger effect, which I've blogged about so many times. I'm sharing it again today as a Psychology for Writers post because I think it describes human behavior so well that it could easily influence how you write characters.

The Dunning Kruger effect describes the relationship between actual knowledge and perceived competence (how much knowledge you think you have or how well you think you know a topic). But to even begin to make accurate ratings of your own competence, you need to know enough about that topic, and, most importantly, you need to know just how much you don't know.

If that description doesn't make sense, don't worry - I'm about to break things down. People who know very little about a topic and people who know a lot about a topic often rate their perceived competence very similarly. Why? People who know very little about a topic simply don't know enough to know how much there is to know on a topic. So they may underestimate how much work it takes to become an expert. In essence, they ask "How hard can it be?"

But people who have moderate levels of knowledge on a topic rate their competence much lower - lower than people with high levels of knowledge, yes, but also lower than people with low levels of knowledge. They now have enough knowledge on a topic to be aware of how much more work it would take to become an expert.

If you, like me, prefer to see things plotted out to make sense of them, I offer this graph from a Story.Fund post about the Dunning Kruger effect:

And if you'd like a real life example of the Dunning Kruger effect, I know of no better example than our President, who constantly speaks about topics he knows little about as though he were an expert.

What does this mean for your characters? It explains why complete beginners will often charge into something they know little about - and end up in over their heads. This happens a lot in fiction. But it also means that someone with a moderate amount of competence in something will be extremely cautious and not confident in their abilities. It could explain why someone chooses not to help that brash character who rushes in blindly - they don't have to be uncaring or even have poor self-esteem to feel that way. They are simply more aware of their shortcomings and gaps in knowledge.

Wednesday, March 14, 2018

Bend and Snap

This is quite possibly the cutest thing ever:

The moment I handed @RWitherspoon my 15,000 dissertation/love letter to Legally Blonde - and yes, it was scented. I blacked out somewhere between her taking it and Oprah saying 'wow' #WrinkleInTime pic.twitter.com/56XV8FElqV - Lucy Jayne F🌸rd (@lucyj_ford) March 13, 2018

That's right - reporter Lucy Jayne Ford wrote a dissertation on Legally Blonde and was able to give star Reese Witherspoon a copy of it:

Lucy Jayne Ford, a reporter for Bauer Media in London, was at a press junket to interview Witherspoon and her Wrinkle in Time co-stars Oprah Winfrey and Mindy Kaling, but couldn’t resist the opportunity to show her admiration for Witherspoon.

“I want to start by saying I’m obviously a gigantic fan of all of you; Reese, I actually wrote 15,000 words on you once,” Ford said before handing Reese a copy of her lengthy dissertation and explaining that she had watched the film 800 times to write it.

While Ford conducted her interview after giving Witherspoon the dissertation, Witherspoon made sure to ask her one burning question that Elle Woods most definitely would have approved of before their time ended.

“I have just one question,” Witherspoon said. “Is it scented?”

“I actually put perfume on it before this,” Ford confided.

Statistical Sins: Not Creating a Codebook

I'm currently preparing for Blogging A-to-Z. It's almost a month away, but I've picked a topic that will be fun but challenging, and I want to get as many posts written early as I can. I also have a busy April lined up, so writing posts during that month would be a challenge even if I had picked an easier topic.

I decided to pull out some data I collected for my Facebook study to demonstrate an analysis technique. I knew right away where the full dataset was stored, since I keep a copy in my backup online drive. This study used a long online survey made up of several published measures. I was going through and identifying the variables associated with each measure, and was trying to take stock of which ones needed to be reverse-scored, as well as which ones also belonged to subscales.

I couldn't find that information in my backup folder, but I knew exactly which measures I used, so I downloaded the articles from which those measures were drawn. As I was going through one of the measures, I realized that I couldn't match up my variables with the items as listed. The variable names didn't easily match up and it looked like I had presented the items within the measure in a different order than they were listed in the article.

Why? I have no idea. I thought for a minute that past Sara was trolling me.

I went through the measure, trying to match up the variables, which I had named as an abbreviated version of the scale name followed by a "keyword" from the item text. But the keywords didn't always match up to any item in the list. Did I use synonyms? A different (newer) version of the measure? Was I drunk when I analyzed these data?

I frantically began digging through all of my computer folders, online folders, and email messages, desperate to find something that could shed light on my variables. Thank the statistical gods, I found a codebook I had created shortly after completing the study, back when I was much more organized (i.e., had more spare time). It's a simple codebook, but man, did it solve all of my dataset problems. Here's a screenshot of one of the pages:

As you can see, it's just a simple Word document with a table that gives the variable name, the original text of the item, the rating scale used for that item, and finally what scale (and subscale) it belongs to and whether it should be reverse-scored (noted with "R" under subscale). This page displays items from the Ten-Item Personality Inventory.
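A codebook like this also makes scoring mechanical. Here's a minimal Python sketch, assuming each codebook row records the variable name, item text, rating-scale range, scale, subscale, and a reverse-scoring flag. The variable names and the participant's responses are hypothetical; the four items and their keying follow the published scoring for the Ten-Item Personality Inventory (TIPI) as I understand it, but check any real codebook against the source article. Reverse-scoring uses (scale minimum + scale maximum) - raw score, so on a 1-7 scale a 3 becomes a 5.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass(frozen=True)
class CodebookEntry:
    """One row of a study codebook."""
    variable: str    # variable name in the dataset (hypothetical names below)
    item_text: str   # original wording shown to participants
    scale_min: int   # lowest rating-scale value
    scale_max: int   # highest rating-scale value
    scale: str       # measure the item comes from
    subscale: str    # subscale the item belongs to
    reverse: bool    # True if the item is reverse-scored ("R")

CODEBOOK = [
    CodebookEntry("TIPI_extraverted", "Extraverted, enthusiastic", 1, 7, "TIPI", "Extraversion", False),
    CodebookEntry("TIPI_reserved", "Reserved, quiet", 1, 7, "TIPI", "Extraversion", True),
    CodebookEntry("TIPI_critical", "Critical, quarrelsome", 1, 7, "TIPI", "Agreeableness", True),
    CodebookEntry("TIPI_sympathetic", "Sympathetic, warm", 1, 7, "TIPI", "Agreeableness", False),
]

def score_item(entry, raw):
    """Return the keyed score; reverse-scoring maps x to (min + max) - x."""
    return entry.scale_min + entry.scale_max - raw if entry.reverse else raw

def subscale_scores(codebook, response):
    """Average the keyed item scores within each subscale for one participant."""
    by_subscale = {}
    for entry in codebook:
        keyed = score_item(entry, response[entry.variable])
        by_subscale.setdefault(entry.subscale, []).append(keyed)
    return {name: mean(values) for name, values in by_subscale.items()}

# Hypothetical responses from one participant
participant = {"TIPI_extraverted": 6, "TIPI_reserved": 3,
               "TIPI_critical": 2, "TIPI_sympathetic": 7}
print(subscale_scores(CODEBOOK, participant))
# {'Extraversion': 5.5, 'Agreeableness': 6.5}
```

Because the scoring rules live in the codebook rather than being hard-coded in the analysis, adding another measure only means adding rows, and the codebook doubles as documentation for future you.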

Sadly, I'm not sure I'd take the time to do something like this now, which is a crime, because I could very easily run into this problem again - where I have no idea how/why I ordered my variables and no way to easily piece the original source material together. And as I've pointed out before, sometimes when I'm analyzing in a hurry, I don't keep well-labeled code showing how I computed different variables.

But all of this is very important to keep track of, and it belongs in a study codebook. At the very least, I would recommend keeping one annotated copy of your surveys (noting each item's source, scale/subscale, and whether it's reverse-scored - information you wouldn't want on the copy your participants see), along with the code/syntax for all analyses. Even if your annotations are a bunch of Word comment bubbles and your code/syntax is just a bunch of commands with no additional description, you'll be a lot better off than I was with only the raw data.
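Had my codebook been machine-readable from the start, the mismatch I ran into could have been caught automatically. This hypothetical Python helper compares a dataset's column names with the variables documented in a codebook and reports anything undocumented or missing; the column names are invented for illustration.

```python
def audit_columns(dataset_columns, codebook_variables):
    """Compare a dataset's column names with the variable names listed in its codebook."""
    columns, documented = set(dataset_columns), set(codebook_variables)
    return {
        "undocumented": sorted(columns - documented),       # in the data but not the codebook
        "missing_from_data": sorted(documented - columns),  # in the codebook but not the data
    }

# Hypothetical column names, for illustration only
data_columns = ["id", "tipi_extra", "tipi_reserv", "fb_hours"]
codebook_vars = ["tipi_extra", "tipi_reserv", "tipi_critic"]
print(audit_columns(data_columns, codebook_vars))
# {'undocumented': ['fb_hours', 'id'], 'missing_from_data': ['tipi_critic']}
```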

I recently learned there's an R package that will create a formatted codebook from your dataset. I'll do some research into that package and have a post about it, hopefully soon.

And I sincerely apologize to past Sara for thinking she was trolling me. Lucky for me, she won't read this post. Unless, of course, O'Reilly Auto Parts really starts selling this product.

Monday, March 12, 2018

If This is Your First Time at Write Club, You Have to Write

I'm unfortunately two short stories behind on my goal. But today I learned about a writing contest that might help me get back on track:

Here’s the ABC’s of how it works. Anytime during the submission period (Mar 12-Apr 1), you simply send in a 500-word writing sample using a pen name (details on how to do that below). Once the submission period closes, all the entries are read by a panel of fifteen volunteers (I call them my slushpile readers). The slushpile readers each select their top samples and then the contestant pool narrows down to the thirty contestants picked by the most judges. Over the course of the next eight weeks, we’ll hold daily bouts (M-F) right here on this blog – randomly pitting the anonymous 500-word writing samples against each other. The winners of these bouts advance into elimination rounds, and then playoffs, quarter-finals, and then ultimately a face-off between two finalists to determine a single champion. The writing sample can be any genre, any style (even poetry), from a larger piece of work or flash fiction -- the word count being the only restriction. It’s a way to get your writing in front of a lot of readers, receive a ton of feedback, all without having to suffer the agony and embarrassment of exposure. How cool is that?

And how are the winners of each bout determined? By you and other WRiTE CLUB readers! Anyone who visits my blog during the contest can vote for the writing sample that resonates with them the most in a bout. All I ask is that you leave a brief critique of each piece to help the contestants improve their craft.

Now to figure out what 500-word sample to submit. Obviously, it needs to be something that could stand on its own without the larger context of the story or novel from which it was drawn. I have until April 1st to figure this out.

Sunday, March 11, 2018

Statistics Sunday: Factor Analysis and Psychometrics

Last Sunday, I provided an introduction to factor analysis - an approach to measurement modeling. As you can imagine, because it's used in measurement, factor analysis is a tool of many psychometricians; it can be used during measure development to help establish the measure's validity.

Factor analysis is frequently considered a classical test theory approach, though, rather than an item response theory approach, so it isn't the primary way I check dimensionality for many of the measures I've worked on. In classical test theory, the focus is on the complete measure as a unit: only by including all of the items can one achieve the reliability and validity established in the development and standardization research on the measure. Factor analysis, while providing information on each item's individual contribution to the measurement of a concept, also places the focus on the complete set of items - and these items only - as the way to tap into the factor or latent variable.

Item response theory approaches (and for the moment, I'm including Rasch - which I know will ruffle some feathers), on the other hand, consider the relationship of individual items to the concept being measured, and each item has its own statistics describing how (and how well) it measures the concept. The items a person receives can be considered a sample of all potential items on that concept. (In fact, the items in the item bank can themselves be considered a sample of all potential items, written and unwritten.) Yes, there are stipulations that affect the overall measure a person completes - guidelines about how many items they should respond to in order to adequately measure the concept, and so on - but there are few stipulations about the specific items themselves. It is because of item response theory approaches that we are able to have computer adaptive testing. If we adopted the classical test theory view of a measure as a specific and unchanging combination of items, we would not be able to justify giving different people different items.
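To make the "different people, different items" point concrete: under the dichotomous Rasch model, the probability that a person with ability theta answers an item of difficulty b correctly is 1 / (1 + exp(-(theta - b))). Because every item carries its own calibrated difficulty, ability can be estimated from whatever subset of calibrated items a person happened to receive, and the estimates land on the same scale. Below is a minimal Python sketch, assuming right/wrong items with already-known difficulties and a plain Newton-Raphson maximum-likelihood estimate; the item bank and responses are made up, and operational software uses more elaborate estimation and handles cases this sketch doesn't.

```python
import math

def rasch_prob(theta, b):
    """Probability of a correct response under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def estimate_ability(responses, difficulties, iterations=50, tol=1e-8):
    """Maximum-likelihood ability estimate (logits) via Newton-Raphson.

    responses: 0/1 scores; difficulties: matching item difficulties (logits).
    All-correct or all-incorrect patterns have no finite estimate, so they are rejected.
    """
    raw = sum(responses)
    if raw == 0 or raw == len(responses):
        raise ValueError("no finite MLE for all-correct or all-incorrect patterns")
    theta = 0.0
    for _ in range(iterations):
        probs = [rasch_prob(theta, b) for b in difficulties]
        gradient = raw - sum(probs)                     # observed minus expected score
        information = sum(p * (1 - p) for p in probs)   # test information at theta
        step = gradient / information
        theta += step
        if abs(step) < tol:
            break
    return theta

# Hypothetical calibrated item bank (difficulties in logits)
bank = {"item_a": -1.0, "item_b": -0.5, "item_c": 0.0, "item_d": 0.5, "item_e": 1.0}

# Two people answer different subsets of the bank
person_1 = {"item_a": 1, "item_c": 1, "item_e": 0}
person_2 = {"item_b": 1, "item_d": 0}

for name, answers in (("person 1", person_1), ("person 2", person_2)):
    theta = estimate_ability(list(answers.values()), [bank[item] for item in answers])
    print(f"{name}: ability estimate {theta:.2f} logits")
```

Neither person saw the same items, yet both estimates are on the same logit scale, which is the property adaptive testing depends on.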

Though factor analysis represents a classical test theory approach, it is still an important one - particularly confirmatory factor analysis (CFA), which encourages measure developers to specify a priori where a particular item belongs. For this reason, it isn't unusual to see psychometricians who use Rasch or item response theory include CFA in their analyses. In fact, in some psychometrics consulting work I did some years back, I was asked to do just that. But this approach only makes sense when working with fixed-form measures. It would be difficult to conduct CFA on item banks used in adaptive testing, since these contain potentially thousands of items, and no single individual would ever complete all of them.

The Rasch program I predominantly use, Winsteps, checks dimensionality with a data-driven approach called principal components analysis (which I'll blog about sometime soon!), applied to the residuals left over after fitting the Rasch model. The purpose of this check is simply to ensure the items being analyzed don't violate a key assumption of Rasch, which is that the items measure a single concept (i.e., that they are unidimensional). But people can, and often do, ignore these results completely, particularly if they have other evidence supporting the validity and unidimensionality of the concepts included on the measure, such as from a content validation study. In fact, Rasch itself is a measurement model, so anyone engaging in this kind of analysis is already dealing with latent variables, even if they ignore the principal components analysis results. A Rasch analysis also provides many other statistics, such as item fit statistics, that can help weed out bad items that don't fit the model.
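For the curious, here's a rough Python sketch of that kind of residual check: compute the Rasch-expected probabilities, standardize the residuals (observed minus expected, divided by sqrt(P(1 - P))), and look at the eigenvalues of their item-by-item correlation matrix. To keep it short, it assumes the person abilities and item difficulties are known (they're simulated), whereas real software estimates them first, and it uses NumPy. It illustrates the idea; it does not reproduce Winsteps output. Because the simulated data are unidimensional by construction, no residual component should stand out.

```python
import numpy as np

def rasch_residual_pca(responses, theta, b):
    """Eigenvalues (largest first) of the correlation matrix of standardized Rasch residuals.

    responses: persons x items array of 0/1 scores
    theta: person abilities (logits); b: item difficulties (logits)
    """
    expected = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
    z = (responses - expected) / np.sqrt(expected * (1.0 - expected))
    corr = np.corrcoef(z, rowvar=False)              # item-by-item residual correlations
    return np.sort(np.linalg.eigvalsh(corr))[::-1]

rng = np.random.default_rng(42)
theta = rng.normal(0.0, 1.0, size=500)               # simulated abilities
b = np.linspace(-1.5, 1.5, 8)                        # simulated difficulties for 8 items
p = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
responses = (rng.random(p.shape) < p).astype(float)  # unidimensional by construction

eigenvalues = rasch_residual_pca(responses, theta, b)
print(np.round(eigenvalues, 2))
```

A rule of thumb often cited for this check is that a first residual eigenvalue below about 2 looks like noise, but it's a heuristic, not a formal test.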

Look for more posts on this topic (and related topics) in the future!

Thursday, March 8, 2018

The Art of Conversation

There are many human capabilities we take for granted, until we try to create artificial intelligence intended to mimic these human capabilities. Even an unbelievably simple conversation requires attention to context and nuance, and an ability to improvise, that is almost inherently human. For more on this fascinating topic, check out this article from The Paris Review, in which Mariana Lin, writer and poet, discusses creative writing for AI:

If the highest goal in crafting dialogue for a fictional character is to capture the character’s truth, then the highest goal in crafting dialogue for AI is to capture not just the robot’s truth but also the truth of every human conversation.

Not only does she question how we can use the essence of human conversation to reshape AI, she questions how AI could reshape our use of language:

Absurdity and non sequiturs fill our lives, and our speech. They’re multiplied when people from different backgrounds and perspectives converse. So perhaps we should reconsider the hard logic behind most machine intelligence for dialogue. There is something quintessentially human about nonsensical conversations.

Of course, it is very satisfying to have a statement understood and a task completed by AI (thanks, Siri/Alexa/cyber-bot, for saying good morning, turning on my lamp, and scheduling my appointment). But this is a known-needs-met satisfaction. After initial delight, it will take on the shallow comfort of a latte on repeat order every morning. These functional conversations don’t inspire us in the way unusual conversations might. The unexpected, illumed speech of poetry, literature, these otherworldly universes, bring us an unknown-needs-met satisfaction. And an unknown-needs-met satisfaction is the miracle of art at its best.

The reality is most human communication these days occurs via technology, and with it comes a fiber-optic reduction, a binary flattening. A five-dimensional conversation and its undulating, ethereal pacing is reduced to something functional, driven, impatient. The American poet Richard Hugo said, in the midcentury, “Once language exists only to convey information, it is dying.”

I wonder if meandering, gentle, odd human-to-human conversations will fall by the wayside as transactional human-to-machine conversations advance. As we continue to interact with technological personalities, will these types of conversations rewire the way our minds hold conversation and eventually shape the way we speak with each other?

Wednesday, March 7, 2018

Statistical Sins: Gender and Movie Ratings

Though I try to feature my own content/analysis/thoughts in my statistics posts, occasionally I encounter a really well-done analysis that I'd rather feature instead. So today, for my statistical sins post, I encourage you to check out this excellent analysis from FiveThirtyEight that uncovers what would qualify as a statistical sin. When conducting opinion polling, it's important to correct for discrepancies between the characteristics of a sample and those of the population, characteristics like gender. But apparently, IMDb ratings show discrepancies too: men often outnumber women in rating different movies, sometimes by as much as 10-to-1. So if you want to put together a definitive list of the best movies, you need to either account for the drastic differences between the population and the raters, or make it clear that the results are heavily skewed by one gender.
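The weighting correction boils down to averaging each group's mean rating by the group's share of the population rather than its share of the raters. This Python sketch uses made-up ratings and rater counts for a single hypothetical movie rated 5-to-1 by men; the 52% female share is the moviegoer figure FiveThirtyEight reports, and FiveThirtyEight's own weighting procedure may differ in its details.

```python
def raw_mean(group_means, group_counts):
    """Average over all raters: each group counts in proportion to how many people rated."""
    total = sum(group_counts.values())
    return sum(group_means[g] * group_counts[g] for g in group_means) / total

def weighted_mean(group_means, population_shares):
    """Post-stratified average: each group counts in proportion to its population share.

    population_shares should sum to 1.
    """
    return sum(group_means[g] * population_shares[g] for g in group_means)

# Hypothetical movie: men and women disagree, and men outnumber women 5-to-1 as raters
means = {"men": 8.0, "women": 7.0}
counts = {"men": 50_000, "women": 10_000}
shares = {"men": 0.48, "women": 0.52}

print(round(raw_mean(means, counts), 2))       # 7.83
print(round(weighted_mean(means, shares), 2))  # 7.48
```

Because men make up most of the raters, the raw average sits close to the men's mean; weighting pulls it back toward the balance of the moviegoing population.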

The Academy Awards rightly get criticized for reflecting the preferences of a small, unrepresentative sample of the population, but online ratings have the same problem. Even the vaunted IMDb Top 250 — nominally the best-liked films ever — is worth taking with 250 grains of salt. Women accounted for 52 percent of moviegoers in the U.S. and Canada in 2016, according to the most recent annual study by the Motion Picture Association of America. But on the internet, and on ratings sites, they’re a much smaller percentage.

We’ll start with every film that’s eligible for IMDb’s Top 250 list. A film needs 25,000 ratings from regular IMDb voters to qualify for the list. As of Feb. 14, that was 4,377 titles. Of those movies, only 97 had more ratings from women than men. The other 4,280 films were mostly rated by men, and it wasn’t even close for all but a few films. In 3,942 cases (90 percent of all eligible films), the men outnumbered the women by at least 2-to-1. In 2,212 cases (51 percent), men outnumbered women more than 5-to-1. And in 513 cases (12 percent), the men outnumbered the women by at least 10-to-1.

Looking strictly at IMDb’s weighted average — IMDb adjusts the raw ratings it gets “in order to eliminate and reduce attempts at vote stuffing,” but it does not disclose how — the male skew of raters has a pretty significant effect. In 17 percent of cases, the weighted average of the male and female voters was equal, and in another 26 percent of cases, the votes of the men and women were within 0.1 points of one another. But when there was bigger disagreement — i.e. men and women rated a movie differently by 0.2 points or more, on average — the overall score overwhelmingly broke closer to the men’s rating than the women’s rating. The score was closer to the men’s rating more than 48 percent of the time and closer to the women’s rating less than 9 percent of the time, meaning that when there was disagreement, the male preference won out about 85 percent of the time.
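The "about 85 percent" figure in that last quote follows from the two shares it reports. Among the films where men and women disagreed, the share that broke toward the men is the male-closer percentage divided by the sum of the two percentages:

$$\frac{48}{48 + 9} = \frac{48}{57} \approx 0.842$$

Since the male-closer share was more than 48 percent and the female-closer share less than 9 percent, the true ratio is at least about 84 percent, which matches the article's rounded figure.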

In the article, a table of the top 500 movies (based on weighted data) demonstrates how gender information affects these rankings. For each movie, it lists the movie's current rank, how it would rank based on women's or men's ratings only, and how it would rank when the data are weighted to reflect the proportions of men and women. Movies like The Shawshank Redemption (#1) and The Silence of the Lambs (#23) would stay roughly where they are. Movies like Django Unchained (#60) and Harry Potter and the Deathly Hallows: Part 2 (#218) would move up to #34 and #50, respectively, while Seven Samurai (#19) and Braveheart (#75) would move down to #59 and #112, respectively. And finally, movies that never made it onto the Top 250 list, like Slumdog Millionaire and The Nightmare Before Christmas, would rank #186 and #199, respectively.

Tuesday, March 6, 2018

Today in "Evidence for the Dunning-Kruger Effect"

A new study shows that watching videos of people performing some skill can result in the illusion of skill acquisition, adding yet more evidence to the "how hard can it be?" mindset outlined in the Dunning-Kruger effect:

Although people may have good intentions when trying to learn by watching others, we explored unforeseen consequences of doing so: When people repeatedly watch others perform before ever attempting the skill themselves, they may overestimate the degree to which they can perform the skill, which is what we call an illusion of skill acquisition. This phenomenon is potentially important, because perceptions of learning likely guide choices about what skills to attempt and when.

In six experiments, we explored this hypothesis. First, we tested whether repeatedly watching others increases viewers’ belief that they can perform the skill themselves (Experiment 1). Next, we tested whether these perceptions are mistaken: Mere watching may not translate into better actual performance (Experiments 2–4). Finally, we tested mechanisms. Watching may inflate perceived learning because viewers believe that they have gained sufficient insight from tracking the performer’s actions alone (Experiment 5); conversely, experiencing a “taste” of the performance should attenuate the effect if it is indeed driven by the experiential gap between seeing and doing (Experiment 6).

In the experiments, participants watched videos of the tablecloth trick (pulling a tablecloth off a table without disturbing the dishes; experiments 1 and 5), throwing darts (experiment 2), doing the moonwalk (experiment 3), mirror-tracing (tracing a path through a maze shown at the top of the screen by drawing in a blank box just below it; experiment 4), and juggling bowling pins (experiment 6). Through their research, the authors isolated the missing element in learning by watching: feeling the actual performance of the task. In the 6th experiment, simply getting a taste of the feelings involved - holding the pins that would be used in juggling without attempting to juggle themselves - changed ratings of skill acquisition.

During the Olympics, when you watch athletes at the top of their game performing tasks almost effortlessly, it's easy to think the tasks aren't as challenging as they actually are. Based on these study results, simply having people put on a pair of ice skates or stand on a snowboard might be enough for them to realize just how difficult these sports actually are.

Just to help put things into perspective, here's a supercut of awesome stunts followed by a person demonstrating why you should not try them at home:

Monday, March 5, 2018

Hamildrop - How Lucky We Are To Be Alive Right Now

If you haven't yet listened to The Hamilton Polka, why?

Now you have no excuse:

It must be nice, it must be nice to have so many talented friends. This is the second Hamildrop - expect one each month this year! (That's right - there will be 10 more!) And so you don't miss the next Hamildrop - check here and just you wait.

Sunday, March 4, 2018

Statistics Sunday: Introduction to Factor Analysis

One of the first assumptions of any measurement model is that all items included in a measure are assessing the same thing. In measurement, we refer to this thing being measured as a construct - also known as a latent variable or factor.

One method of checking that the items are assessing this factor (or these factors) is factor analysis. This statistical approach is one way of seeing whether items "hang together" in such a way that they appear to measure the same thing. It does this by looking at the covariance of the items - the variance they share. Covariance is closely tied to correlation: it's the correlation between two items multiplied by each item's standard deviation, so it reflects both how strongly the items are related and how much they vary.

Figure: The measurement model, a 3-factor model, from last week's Statistics Sunday post

Factor analysis can be conducted in two ways: exploratory, a data-driven approach that separates items into factors based on combinations of items suggested by the data, and confirmatory, where the person conducting the analysis specifies which items measure which factors. Data-driven approaches are tricky, of course. You don't know what portion of the covariance is systematic and what portion is error, and exploratory factor analysis (EFA) can't tell the difference either; it works with whatever covariance is there.

This is why, for the most part, I prefer confirmatory factor analysis (CFA), where you specify, based on theory and some educated guesses, which items fall under which factor. As you might have guessed, you can conduct a factor analysis (exploratory or confirmatory) with any number of factors. You would specify your analysis as single factor, two-factor, etc. In fact, you can conduct EFA by specifying the number of factors the program should extract. But keep in mind, the program will try to find something that fits, and if you tell it there should be 2 factors, it will try to make that work, even if the data suggest the number of factors is not equal to 2.
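One quick way to see why forcing a factor count is risky is to look at the eigenvalues of the item correlation matrix. This Python sketch (using NumPy) simulates six standardized items driven by a single factor, with every loading set to 0.7 as an assumption for illustration, and counts eigenvalues above 1. That count is the Kaiser criterion, one common and imperfect heuristic for how many factors to retain; scree plots and parallel analysis are common alternatives.

```python
import numpy as np

rng = np.random.default_rng(7)
n_people, n_items, loading = 1000, 6, 0.7

factor = rng.normal(size=(n_people, 1))                     # one latent variable
noise = rng.normal(size=(n_people, n_items))                # item-specific error
items = loading * factor + np.sqrt(1 - loading**2) * noise  # standardized items

corr = np.corrcoef(items, rowvar=False)
eigenvalues = np.sort(np.linalg.eigvalsh(corr))[::-1]
print(np.round(eigenvalues, 2))
print("factors suggested by eigenvalue > 1:", int(np.sum(eigenvalues > 1)))
```

With one real factor, only one eigenvalue clears 1. Asking the program for two factors anyway would make it carve a second "factor" out of what is essentially noise.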

Whether you're conducting EFA or CFA, the factors are underlying, theoretical constructs (also known as latent variables). These factors are assessed by observed variables, which might be self-reported ratings on a scale of 1 to 5, judge ratings of people's performances, or even whether the individual responded to a knowledge item correctly. Basically, you can include whatever items you think hang together, with whatever scales they use in measurement. In the measurement model above, observed variables are represented by squares.

While the observed variables have a scale, the factors do not - they are theoretical and not measured directly. Therefore, analysis programs will either ask you to choose, or choose by convention, one of the items connected to the factor to give the factor a scale. (Most programs will, by default, fix the first item's loading at 1. This doesn't mean a perfect relationship between that item and the factor; it simply puts the factor on that item's scale.) The remaining factor loadings - measures of the strength of the relationship between each observed variable and the factor - are then estimated on the same scale as the fixed item. In the model above, factors are represented by circles. The double-ended arrows going between the three factors represent correlations between these factors. And the arrows pointing back at an individual observed variable represent error - variance in that item not explained by the model (an arrow pointing back at a factor represents that factor's own variance).
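The point about fixing a loading at 1 is easy to check numerically. This Python sketch takes a hypothetical one-factor solution (made-up loadings and error variances, chosen so each item's variance is 1), re-expresses it with item 1 and then item 3 as the fixed "marker" item, and converts each version to standardized loadings using standardized loading = loading * SD(factor) / SD(item). The unstandardized loadings and the factor variance change with the choice of marker, but the standardized loadings don't, which is one reason they're the ones worth requesting.

```python
import math

def rescale_to_marker(loadings, factor_variance, marker):
    """Re-express a one-factor solution so the marker item's loading is fixed at 1."""
    scale = loadings[marker]
    new_loadings = [lam / scale for lam in loadings]
    new_variance = factor_variance * scale ** 2  # factor now expressed in the marker item's units
    return new_loadings, new_variance

def implied_item_variances(loadings, factor_variance, error_variances):
    """Model-implied item variance: loading^2 * factor variance + error variance."""
    return [lam ** 2 * factor_variance + err for lam, err in zip(loadings, error_variances)]

def standardize(loadings, factor_variance, item_variances):
    """Standardized loading = loading * SD(factor) / SD(item)."""
    sd_factor = math.sqrt(factor_variance)
    return [lam * sd_factor / math.sqrt(var) for lam, var in zip(loadings, item_variances)]

# Hypothetical one-factor solution (made-up numbers)
base_loadings = [0.8, 0.6, 0.4]
base_variance = 1.0
error_variances = [0.36, 0.64, 0.84]

for marker in (0, 2):
    loadings, variance = rescale_to_marker(base_loadings, base_variance, marker)
    item_vars = implied_item_variances(loadings, variance, error_variances)
    std = standardize(loadings, variance, item_vars)
    print(f"marker item {marker + 1}: loadings {[round(x, 2) for x in loadings]}, "
          f"factor variance {variance:.2f}, standardized {[round(x, 2) for x in std]}")
```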

When conducting a factor analysis, you can (ahem, should) request standardized loadings, which, like correlations, typically range from -1 to +1, with values closer to 1 (positive or negative) reflecting stronger relationships. Basically, the closer a loading's absolute value is to 1, the better that item measures your factor.

In addition to factor loadings, which tell you how well an item maps onto a factor, there are overall fit measures that tell you how well your model fits the data. More on this information, and how to interpret it, later!

In other news, I'm looking at my previous blog posts and putting together some better labels, to help you access information more easily. Look for those better tags, with a related blog post, soon!

Saturday, March 3, 2018

Myers-Brigged, 2.0

I've been feeling different lately - for a variety of reasons I won't go into - and just out of curiosity, decided to take the Myers-Briggs again. I'm a social psychologist, after all, and therefore think personality is malleable. So imagine my surprise when I got basically the same result:

The only difference from my previous test is that I'm "turbulent" as opposed to "assertive". Hmmm...
