As I've said many times in my statistics posts, we collect data from samples and conduct our statistical analyses to estimate underlying population values - the "true state of the world." Bayesian statistics is a different theoretical approach to statistics, where prior information about something is taken into account to derive degrees of belief about the true state of the world. For instance, a friend of mine likes to play a game in her Chicago neighborhood called "gun shots or fireworks?" ("Likes" is probably too strong a word.) You can use Bayesian inference to estimate the probability that a sudden noise is a gun shot rather than fireworks based on, say, the time of year (fireworks are more likely close to July 4th and less likely on November 18th) or patterns of gun crime in the neighborhood. In Bayesian terms, this information is taken into account as your "priors."

There's more to Bayesian statistics than this, and since it's an approach I'm still learning, I'll probably write more about it in the future. But today, I wanted to discuss the theorem that underlies this entire approach to statistics: Bayes' theorem, which deals with conditional probability.

Put simply, conditional probability refers to the likelihood of one thing happening given another thing has happened (which we notate as P[One thing|Another Thing]). For instance, if a student is taking a test, she could correctly answer a question because she knew the answer or because she guessed. For a multiple choice test, we can very easily figure out the probability of getting a correct answer via guessing: 1/the number of choices (so for a question with 5 choices, the probability of correctly guessing is 1/5). And if you had previous information about the likelihood a student has of knowing the material, you could easily figure out the probability that she guessed on any particular question - using Bayes' theorem.

I've seen Bayes' theorem take on a few different forms, which are equivalent. Because this really wouldn't be an article about Bayes' theorem without telling you Bayes' theorem (which is mathematical), here it is:
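Assuming the standard textbook versions, with A and B standing for any two events and A^c for the complement of A, the three forms can be written as:

```latex
% Form 1: the definition of conditional probability
P(A \mid B) = \frac{P(A \cap B)}{P(B)}

% Form 2: the numerator rewritten as P(B | A) P(A)
P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}

% Form 3: the denominator expanded over A and its complement
P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B \mid A)\,P(A) + P(B \mid A^{c})\,P(A^{c})}
```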

I've provided all 3 forms, though the last one is the one I'm most familiar with. The little c next to A refers to the complement - basically Not A. So if P(A) is the probability the student knew the answer to the question, then P(Not A) is the opposite, the probability the student didn't know the answer, which is equal to 1 - P(A).
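To make the student example concrete, here is a minimal Python sketch of the form with the complement in the denominator. The numbers are made up for illustration: I'm assuming the student knows the material for 60% of questions, always answers correctly when she knows it, and otherwise guesses among 5 choices. The function name `posterior` is also just my own label.

```python
def posterior(prior_a, p_b_given_a, p_b_given_not_a):
    """Return P(A|B) = P(B|A)P(A) / [P(B|A)P(A) + P(B|Not A)P(Not A)]."""
    numerator = p_b_given_a * prior_a
    denominator = numerator + p_b_given_not_a * (1 - prior_a)
    return numerator / denominator


# Hypothetical inputs (assumptions, not data from a real class)
p_knew = 0.6                     # P(A): student knew the answer
p_correct_given_knew = 1.0       # P(B|A): she answers correctly if she knew it
p_correct_given_guessed = 1 / 5  # P(B|Not A): a correct guess among 5 choices

# A = "knew the answer", B = "answered correctly"
p_knew_given_correct = posterior(
    p_knew, p_correct_given_knew, p_correct_given_guessed
)
# Guessing is the complement of knowing, so subtract from 1
p_guessed_given_correct = 1 - p_knew_given_correct

print(f"P(knew | correct)    = {p_knew_given_correct:.3f}")
print(f"P(guessed | correct) = {p_guessed_given_correct:.3f}")
```

With these made-up inputs, a correct answer was a guess only about 12% of the time, well below the 40% of questions she would be guessing on overall.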

The thing about Bayes' theorem is that it comes into play in many statistical situations, even when people don't realize it. Let's use a rather famous example. This particular version I'm citing comes from Sheldon Ross's Introduction to Probability Models, but I've seen versions in many places:

Let's say your doctor gives you a lab test to see if you have a certain form of cancer. The test is 95% effective in detecting this cancer. But it has a false positive rate of 1%, meaning that even if you're healthy, there's a 1% probability that you'll get a positive result. Only 0.5% (half a percent) of the population has this form of cancer.

Your doctor tells you your test is positive. What's the chance that you really do have cancer?

Hint: If you said 95% or 99%, you're wrong. Why? Because Bayes' theorem.

We are trying to determine the probability you have cancer (C) given that your test was positive (T) - P(C|T), read as probability of C given T. P(C), the chance of having this form of cancer, is 0.005, so the chance of not having it is 1 - 0.005 = 0.995. The probability of getting a positive result given that you have cancer - P(T|C) - is 0.95, the effectiveness of the test, and the probability of a positive result given that you don't have cancer is 0.01, the false positive rate. So now we plug in the values from above:
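Using the form of the theorem with the complement in the denominator, and writing C^c for "no cancer" (my notation, following the little c convention above), the plugged-in version looks like this:

```latex
\begin{aligned}
P(C \mid T) &= \frac{P(T \mid C)\,P(C)}{P(T \mid C)\,P(C) + P(T \mid C^{c})\,P(C^{c})} \\
            &= \frac{(0.95)(0.005)}{(0.95)(0.005) + (0.01)(0.995)} \\
            &= \frac{0.00475}{0.00475 + 0.00995}
\end{aligned}
```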

If you multiply this out, you get about 0.323. So the chance that you have cancer given your test result was positive is just a little more than 32%. See, the mistake people make is to believe that T given C (the effectiveness of the test at detecting cancer in a person who has it) means the same thing as C given T (the chance that you have cancer given that your test was positive). But as you can see from this math problem, it isn't the same thing.
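If the formula feels abstract, counting people can help. Here is a rough Python sketch that applies the same three numbers to an imaginary group of 100,000 people. The group size is an arbitrary choice of mine and the variable names are just labels; only the 0.005, 0.95, and 0.01 come from the example.

```python
# Hypothetical group size, chosen only to give round counts
population = 100_000

prevalence = 0.005          # P(C): share of people with this cancer
sensitivity = 0.95          # P(T|C): positive test given cancer
false_positive_rate = 0.01  # P(T|Not C): positive test given no cancer

# Split the group by true cancer status
with_cancer = population * prevalence
without_cancer = population - with_cancer

# Count the positive tests coming from each group
true_positives = with_cancer * sensitivity
false_positives = without_cancer * false_positive_rate

# Of everyone who tested positive, what share actually has cancer?
p_cancer_given_positive = true_positives / (true_positives + false_positives)

print(f"People with cancer:       {with_cancer:,.0f}")
print(f"Positive and have cancer: {true_positives:,.0f}")
print(f"Positive but healthy:     {false_positives:,.0f}")
print(f"P(C|T) = {p_cancer_given_positive:.3f}")
```

Because healthy people vastly outnumber people with cancer, even a 1% false positive rate produces more false positives than true positives, which is why P(C|T) ends up so much lower than P(T|C).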

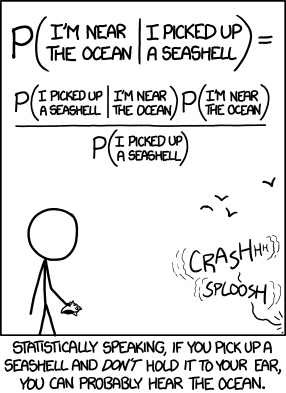

Or here's Bayes explained by XKCD:

So there it is: your introduction to Bayes' theorem! Hope you enjoyed it!

I'm currently reading a lot about probability, for my Camp NaNoWriMo project that I'm hoping will become a book about statistics. During April A to Z, where I had to write about a more advanced statistical concept (alpha) first, I had to approach this concept differently, using it as a way to demonstrate why we teach basic probability in a statistics class. And in writing this material and thinking about it in this way, I realized that the way we teach probability in statistics is, in my view, ineffective and far too basic. It doesn't delve enough into probability to give you a good understanding of it, and it rarely explains why you even need to learn probability at all.

That concept becomes clearer later on, but if you don't know why you're going to need to know something later, you don't usually pay attention to it. And I'm a big believer in giving good, clear answers to the question, "When am I ever going to need this?" In fact, that topic is usually the subject of my first day lecture when I teach Introductory Statistics as well as Research Methods.

So that's something I hope to do differently in my project. Wish me luck, because much of this is brand new territory for me.
