A friend sent me a copy of this collection of stories, *Stories of Your Life and Others*, and I just finished reading it over the weekend. You may not be familiar with the author, Ted Chiang, but you're probably familiar with a movie based on his work: *Arrival*. Now that I have a bit of time on my hands, I thought I'd sit down and write a quick review. (I also hope to write reviews for the two other books I finished recently, *Sleeping Beauties* and *Weapons of Math Destruction*, so stay tuned.)

Tower of Babylon - Unlike the other stories, which take place in a time period similar to our own (perhaps the not-too-distant future), this story takes place in the distant past, during the building of the Tower of Babylon. The tower has been built, and miners have been brought in to complete the goal: to mine into the vault of Heaven. The story is told from the viewpoint of one of the miners as he and his colleagues make their way to the top of the tower, a climb that takes four months. Communities have been built at different points along the tower, and gardens provide food. The miner is skeptical about whether this undertaking is a good idea, while the builders have no doubts, and those who live on the tower have no desire to go back down to earth. I really felt a creeping discomfort as they went higher (probably in part because I'm afraid of heights).

Despite taking place in the past, the story explores a more modern issue that comes up with regard to science and technology: just because you *can* do something doesn't mean that you *should*.

Understand - This is the standout of the collection, though I enjoyed all of the stories. Leon Greco falls into a frozen lake and spends an hour underwater, but he is saved from brain death by Hormone K, an experimental treatment for people with brain damage. Not only does he recover, he comes back with more cognitive ability than he had before. He volunteers for a study of Hormone K so that he can receive more, and he becomes addicted to his increased intelligence. As his abilities grow, he gains control over his own metacognition and becomes able to literally program his brain.

The story is a great example of speculative fiction: what would happen if we suddenly became this intelligent? Leon reaches a point where he doesn't sleep. He just rests and hallucinates, which is what happens to us when we're deprived of sleep for too long (one could argue that dreams are phenomenologically similar to hallucinations). This makes a lot of sense to me. Leon gains control over every system of his body, essentially making his entire brain capable of executive function (a much cooler and more accurate version of the "we only use X% of our brain" myth), but the cost may be that he has to maintain conscious control over all of his bodily functions, like heart rate and breathing.

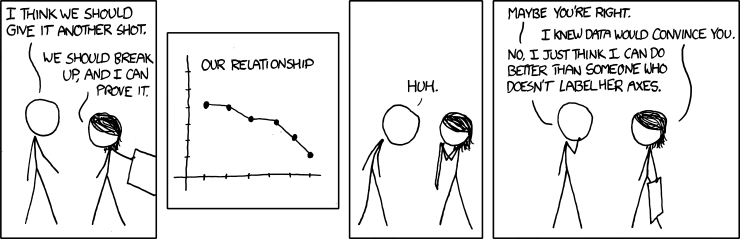

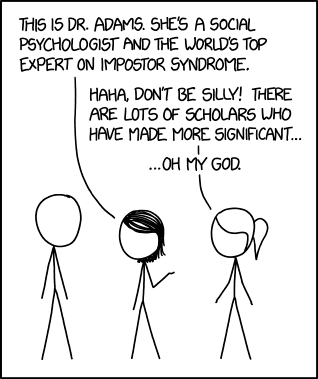

One of many things I got out of this story is the problem with such extreme levels of intelligence (and one could probably argue that the same problem applies to people of much lower intelligence): there's no persuading them. To be persuaded, a person has to think, at some level, that they could be wrong. If someone doesn't recognize that they might be wrong, whether because they can't consider other viewpoints or because of extreme intelligence, there's no way to change their mind.

In fact, this issue of communication, of trying to make another person *understand* your viewpoint, is a recurring theme in this collection. When you are finally able to understand and see something the way the other person does, it changes you.

Division by Zero - This story follows the lives and marriage of two academics, a mathematician and a biologist, and shows how our intellectual life can strongly affect our emotional and romantic life. The mathematician makes a discovery that rocks her entire worldview, and she tries to help her husband understand it while also dealing with its aftermath. The story explores the cost of discovery, touching again on the issue of *can* do something versus *should*. Saying any more would probably spoil it, since it's a short read.

Story of Your Life - This is the story on which *Arrival* is based. Many elements from the story made their way into the movie, though there are a lot of big differences. I really enjoyed learning more about the heptapods and their language, and the story answers a few of the key questions I had after watching the movie. I could also understand some of the differences and why the filmmakers changed certain aspects for the movie. I'd highly recommend this story to anyone who saw the movie, regardless of whether they liked it.

Seventy-Two Letters - This story blends science and technology with religion and fantasy. It follows Robert, who builds simple golem (clay figures brought to life with a name) as a child and, in adulthood, creates complex golem that can take on many human tasks. The key issue here seems to be the gray area between *can* and *should*. Every new technology has consequences, many of them unintended. But the key question, which doesn't have an easy answer, is this: do the consequences outweigh the benefits?

I recognized at the very beginning of the story that this was about golem (full disclosure: I know what golem are and how they work from an episode of *Supernatural*, not because I'm some expert). While I thought it was a cool idea, I was a bit disappointed with this story. It didn't feel as cohesive as the other stories in the collection.

The Evolution of Human Science - The shortest story in the collection, this is kind of a follow-up to *Understand*. A drastic increase in cognitive ability has created a new species: metahumans. Humans, long the most intelligent creatures, now have to deal with a more intelligent lifeform. Metahumans go in their own direction in scientific inquiry and share little with humans, because humans lack the ability to understand. It's the perennial question in close-encounter literature: would more intelligent lifeforms even bother with us, or would they view communicating with us the way we would view communicating with a cockroach?

Hell is the Absence of God - A great example of world-building in a short story, an ability I greatly admire. In this alternate reality, angelic visitation, miracle cures, and death by literal "act of God" are commonplace. The story follows three people impacted by angelic visitation: one whose wife died during a visitation, one who was given a deformity then later healed of it by two angelic visitations, and one trying to find his own purpose in life following his own angelic visitation. Souls are visible, as is their destination (Heaven or Hell), which has both positive and negative consequences for many people in the story. I don't want to say too much that might spoil this story. But one of the things I noticed is that there is actually very little dialogue in the story; instead, the narrator paraphrases many conversations. I wasn't sure I'd like that, but it helped keep the story going at an even pace. I might have to explore dialogue-free writing myself.

Liking What You See: A Documentary - Another excellent example of speculative fiction, and one I could see being turned into an episode of *Black Mirror*. (The bad news is that won't happen; the good news is that's because I just learned this story is being adapted for AMC.) Scientists have figured out how to remove value judgments based on appearance, essentially eliminating the emotional reaction we have when looking at things that are beautiful or ugly. What they are doing is inducing a kind of agnosia (loss of an ability, usually due to brain damage), which they call "calli." This is short for calliagnosia, which I don't think is a real thing, though a related agnosia they discuss, prosopagnosia (the inability to recognize human faces), is real. The story consists of interviews with people on different sides of the calli debate (should people do it or not?), along with news stories covering the topic. The focus is on a single community and its members' vote on whether the procedure should be required.

Overall, you can see Ted Chiang's training as a computer scientist and his love of math work their way into his fiction. But he also shows a strong appreciation for linguistics and for how words shape our thinking and the direction of our lives. This is seen in *Story of Your Life*, but also in *Understand* and *Seventy-Two Letters*. His stories also focus heavily on how technology affects us and on the thin line between technology used for good and technology used for evil. I'm looking forward to reading more of his work!